As artificial intelligence (AI) takes deeper root in our daily lives, it is reshaping multiple sectors, including mental health care. As AI tools become more integrated into therapeutic practice, questions arise about their ability to provide effective therapy. Can AI offer the same level of support as human therapists, and could it one day replace them altogether? Experts and therapists from Hyderabad and other parts of the world weigh the potential benefits and limitations of AI in mental health care, in a debate that is contentious yet necessary.

To understand the future of therapy, let’s first look at its origins.

Dr Zoya Ahmed, a 32-year-old clinical psychologist based in Hyderabad, explains that therapy has its foundations in psychoanalysis. In psychoanalysis, patients were often encouraged to free-associate, which meant “thinking out loud, a random thought, gibberish, anything and everything”, while the therapist listened attentively and intervened as little as possible.

Over time, behavioural psychologists challenged the emphasis on unconscious processes and focused instead on modifying behaviour through structured techniques. These techniques later developed into Cognitive Behavioural Therapy (CBT), which connects thoughts, feelings, and behaviours and offers practical strategies for addressing mental health issues.

Despite differences in methodology, research consistently points to the importance of the therapeutic relationship. “The effectiveness of therapy often depends on trust; whether patients feel heard, supported, and challenged at the right moments matters more than the specific therapeutic approach,” says Dr Zoya Ahmed.

AI’s role in therapy

AI has already made significant inroads into mental health care, particularly in delivering structured, protocol-based interventions.

For instance, computerized CBT programs guide users through sessions targeting issues like anxiety and procrastination. “AI, if given the right prompts, can excel at identifying and breaking down cognitive and behavioural patterns, helping patients recognize and manage thoughts. However, blindly trusting AI is problematic. In therapy, we often provide a personalized journey to address individual issues, whereas AI methods can be quite generic,” Dr Zoya further explains.

“What AI lacks is the ability to foster the human connection necessary for building trust and self-compassion,” she adds.

The empathy gap

Dr Shruthi Sharma, a counselling psychologist, says therapy involves two critical elements: understanding the mind through knowledge and skill-building, and fostering empathy and trust. While AI can support the first element, it struggles with the second.

The Hyderabad-based psychologist stresses that a compassionate therapist can teach self-compassion, something AI has yet to replicate.

Despite these limitations, the integration of AI into mental health care is expanding. Virtual therapy assistants can define mental disorders, explain symptoms, and provide evidence-based techniques. Yet, many experts argue that recovery depends on the relational aspect of therapy, which remains uniquely human.

A threat or a tool?

The possibility of AI replacing therapists entirely raises ethical and emotional concerns. “If AI evolves to replicate human relationships, it won’t just challenge the role of therapists; it could alter how we experience connection itself,” warns Dr Shruthi. This extends beyond therapy to broader roles traditionally defined by human relationships, such as parenting and friendship.

Some alarming trends already suggest a shift in human behaviour. For instance, people going on virtual dates or forming attachments to AI companions show how technology is encroaching on relational domains.

Dr Shruthi notes, “At first, it might not seem like a problem, but over time this can make individuals more dependent on these artificial interactions, reducing their ability to manage real-world relationships. This detachment can lead to feelings of loneliness, anxiety, and even depression, as people begin to feel disconnected from the real world. Moreover, when people rely too heavily on AI for emotional support, they may struggle to develop crucial social and coping skills needed in actual human interactions.”

An empirical study by Mohammed Amin Kuhail, Justin Thomas and colleagues suggests that AI chatbots such as Pi could be effective in the early stages of therapy, especially where access to human therapists is limited. Such chatbots could offer a helpful option for people experiencing mild to moderate mental health issues.

Another study, by Amelia Fiske, Peter Henningsen and Alena Buyx, highlighted that AI raises significant ethical concerns, including harm prevention, privacy and data security, and the lack of clear guidelines for developing AI technologies and integrating them into clinical practice.

The study also points to gaps in regulatory frameworks and the risk that AI could be used to replace human therapists, exacerbating health inequalities. Specific challenges include ensuring proper risk assessment, respecting patient autonomy, maintaining transparency in AI decision-making, and addressing the long-term impact of AI on our understanding of mental health and the human condition.

Exploiting technical vulnerabilities

Dr Zoya Ahmed also raises concerns that go beyond therapy itself. She points out that many AI platforms collect and store sensitive personal data, including preferences, habits, emotional states, and private conversations. Unfortunately, this data is not always kept secure, and in some cases it can be accessed by third parties or stolen in hacks. Weak data protection increases the risk of cyber threats such as identity theft, blackmail, and scams.

Additionally, these platforms may lack transparency regarding how they use or share personal information, further exposing users to vulnerabilities. The more personal information shared, the greater the risk of it falling into the wrong hands, leaving users open to cyber-attacks and online exploitation.

Demo

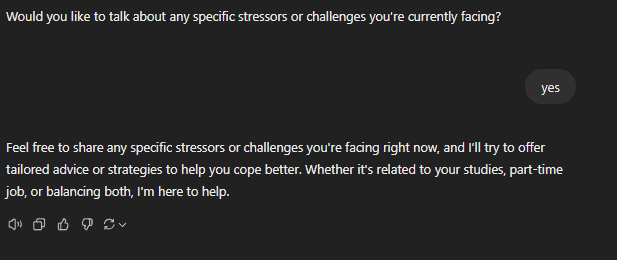

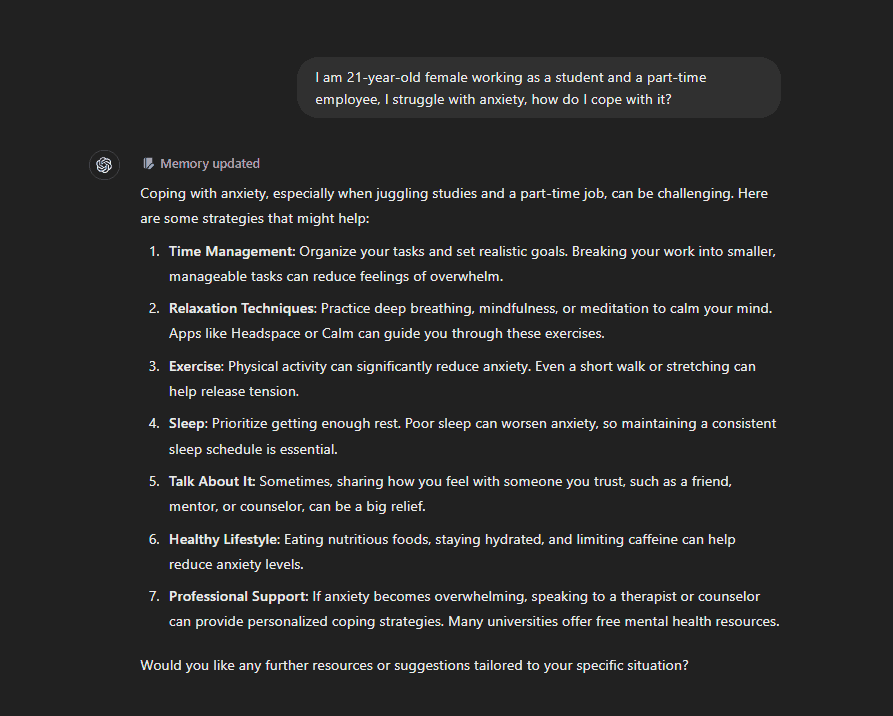

Here’s how ChatGPT, a popular AI tool, provides assistance when asked about a mental health issue.

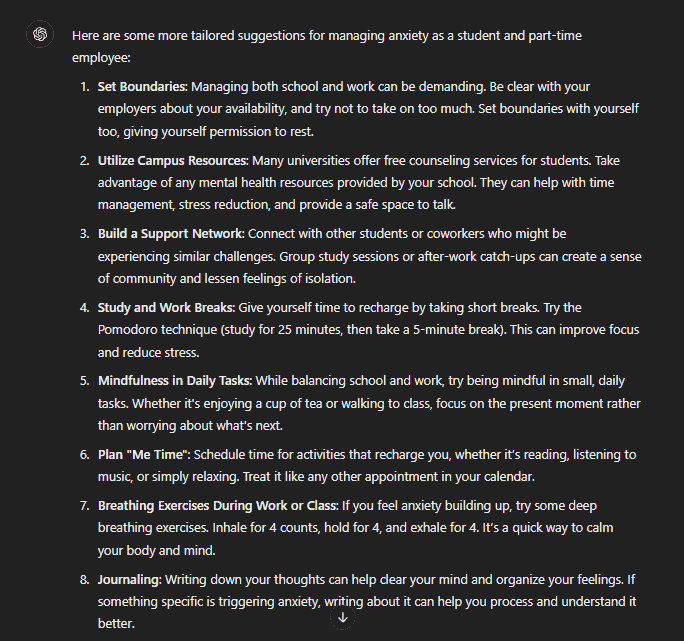

When asked for more tailored suggestions for managing anxiety as a student and part-time employee, here is what ChatGPT said:

ChatGPT further asked, “Would you like to talk about any specific stressors or challenges you’re currently facing?”